Aliaksandr Siarohin

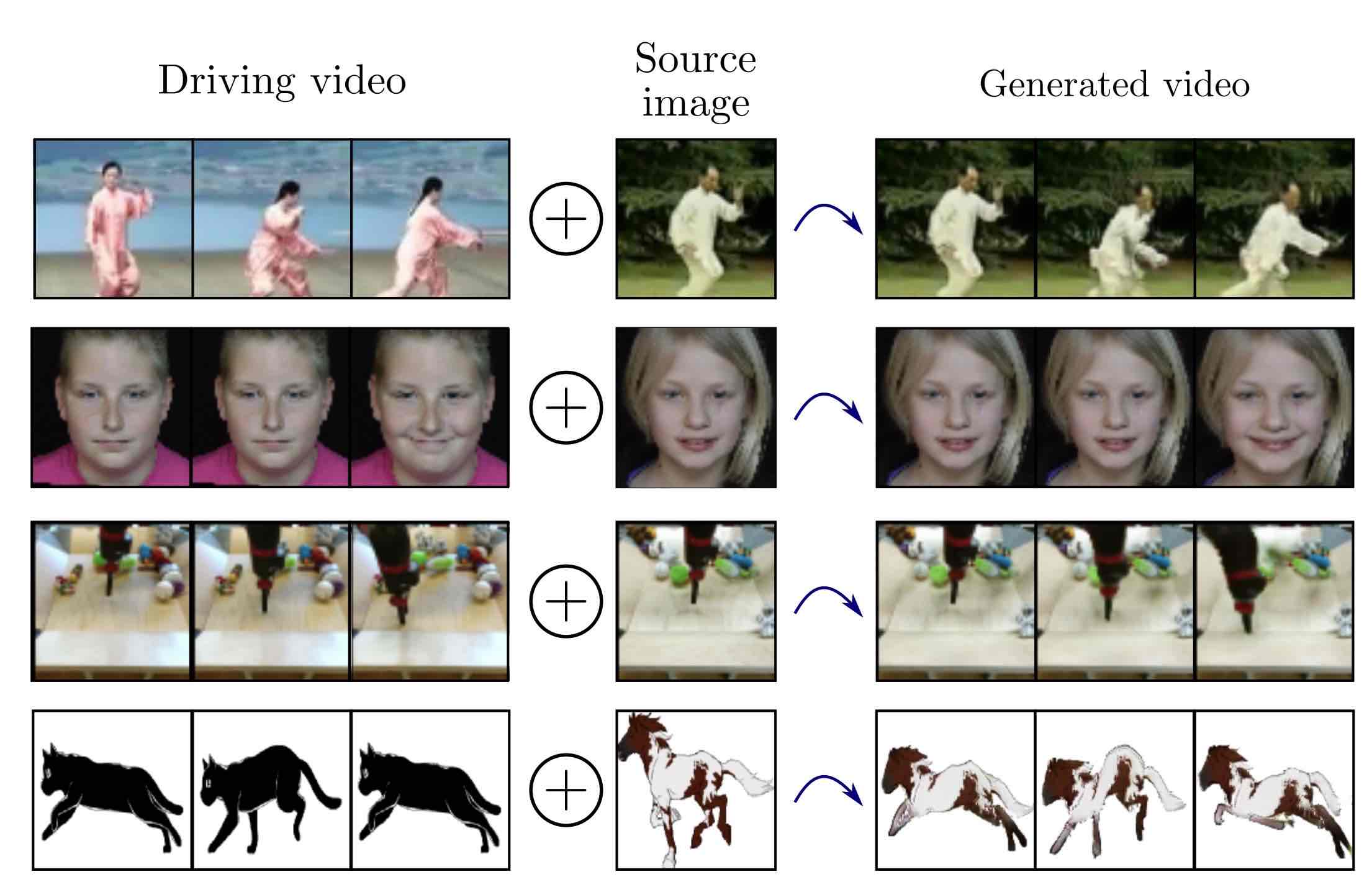

Welcome! I am a Research Scientist at Snap Research in the Creative Vision team. Previously, I was a Ph.D. student at the University of Trento, where I worked under the supervision of Nicu Sebe in the Multimedia and Human Understanding Group (MHUG). My research interests include machine learning for image animation, video generation, generative adversarial networks, and domain adaptation.

My work has been published in top computer vision and machine learning conferences. I also did internships at Snap Inc. and Google.

I was a Snap Research Fellow in 2020.

Contact: aliaksandr

| [Google Scholar] | [GitHub] | [CV] |

Publications:

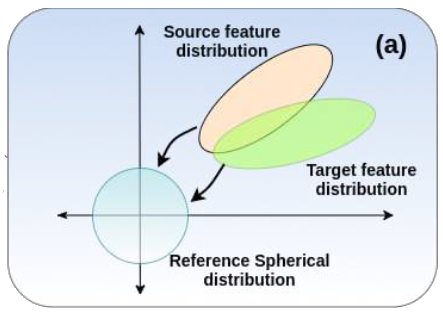

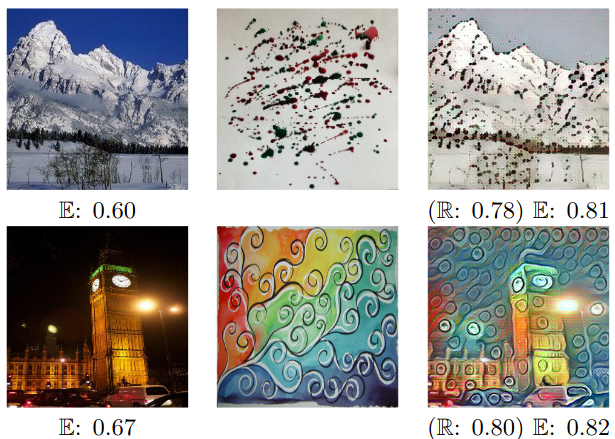

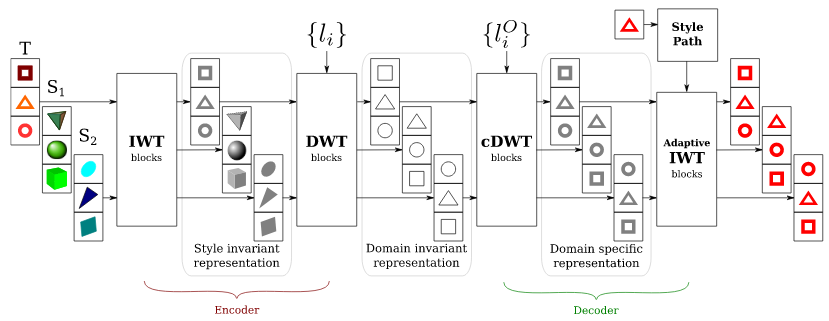

TriGAN: image-to-image translation for multi-source domain adaptation

Subhankar Roy, Aliaksandr Siarohin, Enver Sangineto, Nicu Sebe, Elisa Ricci

Machine Vision and Applications 2021

| [Paper] |

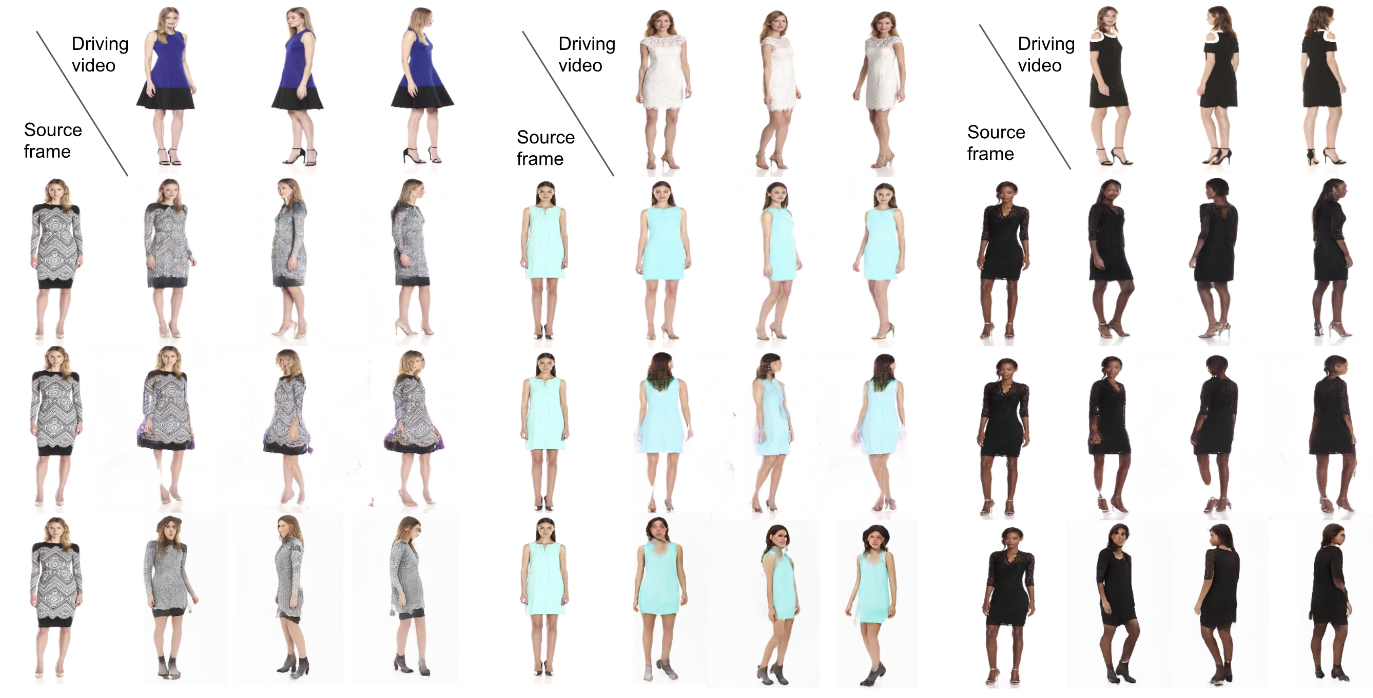

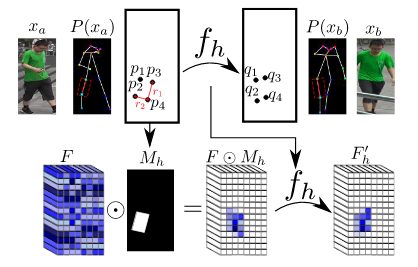

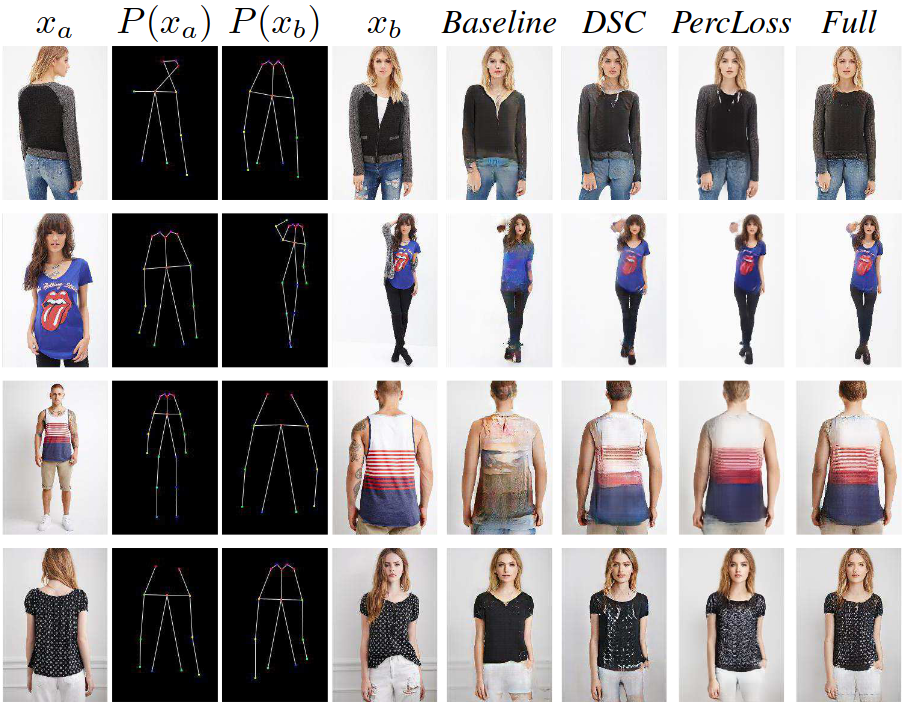

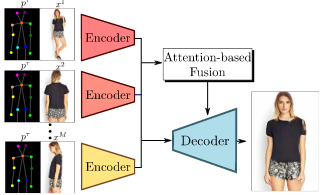

Attention-based Fusion for Multi-source Human Image Generation

Stéphane Lathuilière, Enver Sangineto, Aliaksandr Siarohin, Nicu Sebe

WACV 2020

| [Paper] |